Arrieta A. B., Díaz-Rodríguez N., Del Ser J., Bennetot A., Tabik S., Barbado A., García S., Gil-Lopez S., Molina D., Richard B., Chatila R., Herrera F. (2020). Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges Toward Responsible AI. Information Fusion, 58, 82–115.

Chromik M., Eiband M., Buchner F., Krüger A., Butz A. (2021). I Think I Get Your Point, AI! The Illusion of Explanatory Depth in Explainable AI. 26th International Conference on Intelligent User Interfaces, (pp. 307–317).

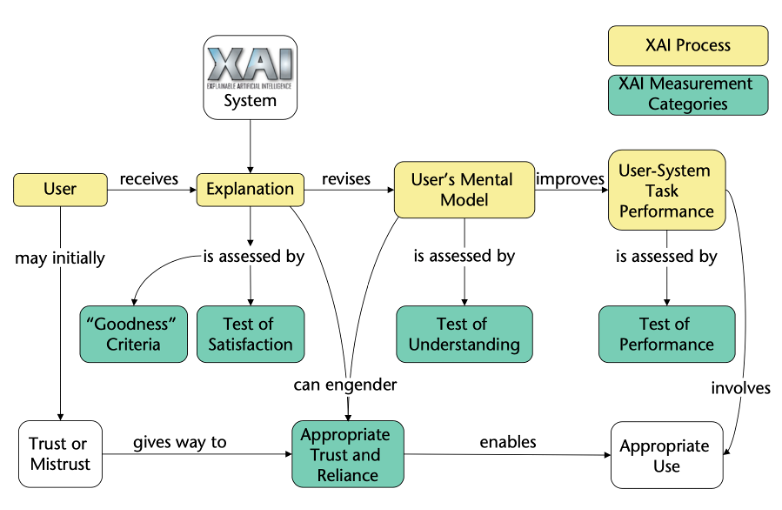

Gunning D. & Aha D. W. (2019). DARPA’s Explainable Artificial Intelligence Program. AI Magazine, 40(2).

Huvila H. (2021). Information Behavior and Practices Research Informing Information Systems Design. Journal of the Association for Information Science and Technology, 1–15.

Neerincx M.A., van Vught W., Henkemans O.B., Oleari E., Broekens J., Peters R., Kaptein F., Demiris Y., Kiefer B., Fumagalli D. (2019). Socio-cognitive engineering of a robotic partner for child’s diabetes self-management. Front. Robot, 1–16.

Poursabzi-Sangdeh F., Goldstein D. G., Hofman J., Wortman J., Wallach H. (2021). Manipulating and measuring model interpretability. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, (pp. 1–52).

Ridley M. (2022). Explainable Artificial Intelligence (XAI). Information Technology and Libraries, 41(2).

Schoonderwoerd T., Jorritsma W., Neerincx M., van den Bosch K. (2021). Human-centered XAI: Developing design patterns for explanations of clinical decision support systems. International Journal of Human-Computer Studies.

Speith T. (2022). A Review of Taxonomies of Explainable Artificial Intelligence (XAI) Methods. Association for Computing Machinery.